Scrapy is an open-source web crawling framework written in Python. It is designed to help developers scrape and extract data from websites in a structured and organized way. Scrapy allows developers to write code that automates the process of navigating through websites, following links, and extracting data.

Scrapy is widely used in a variety of applications, including web scraping, data mining, and web automation. It is popular for its flexibility, extensibility, and scalability. Scrapy can handle large-scale web scraping projects with ease, making it a great tool for data analysts, researchers, and developers.

Here's a guide on how I set it up and got it working.

Install Scrapy

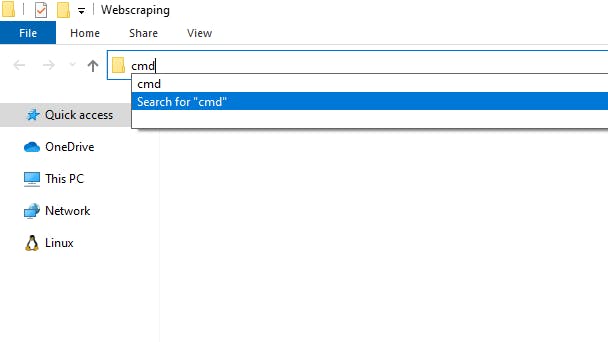

Create a new folder on your computer and open the Command Prompt in it. On Windows, you can do this by typing cmd in the File Explorer address bar and pressing Enter.

Now run this command in the command line:

pip install Scrapy

This will install Scrapy into your Python environment. You can verify the installation with this command:

scrapy --help
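If you prefer to check from Python rather than the command line, here is a minimal sketch that looks up the installed package version using only the standard library. The function name `installed_scrapy_version` is my own, not part of Scrapy.

```python
from importlib import metadata


def installed_scrapy_version():
    """Return the installed Scrapy version string, or None if it is not installed."""
    try:
        # Looks up the package metadata that pip recorded at install time
        return metadata.version("scrapy")
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    version = installed_scrapy_version()
    if version is None:
        print("Scrapy is not installed in this environment")
    else:
        print(f"Scrapy {version} is installed")
```

If this reports that Scrapy is missing even though pip succeeded, the script is probably running under a different Python installation than the one pip installed into.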

Start New Project

To start a project, run another command in the Command Prompt:

scrapy startproject <projectname>

Replace <projectname> with your own project name.

I am going to name my project "web_scraping". (Note: A project name must begin with a letter and can contain only letters, numbers, and underscores.)
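To try out a name before running the command, the naming rule can be sketched as a small check. This is my own approximation of the rule as stated in the note, not Scrapy's internal code, and the helper name `is_valid_project_name` is hypothetical.

```python
import re

# Assumed rule: start with a letter, then only letters, digits, or underscores
_PROJECT_NAME = re.compile(r"[A-Za-z][A-Za-z0-9_]*")


def is_valid_project_name(name: str) -> bool:
    """Return True if the whole name matches the assumed naming rule."""
    return _PROJECT_NAME.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ["web_scraping", "web-scraping", "2fast"]:
        print(candidate, is_valid_project_name(candidate))
```

With this check, "web_scraping" passes, while "web-scraping" (hyphen) and "2fast" (leading digit) do not.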

After running the command, Scrapy created a web_scraping folder in the directory where I ran it.

Voila!!!!
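To see what the command generated, you can list the files in the new folder. With the default template you would typically find a scrapy.cfg file plus an inner package containing items.py, middlewares.py, pipelines.py, settings.py, and a spiders folder, though the exact set may vary by Scrapy version. The sketch below is a generic directory listing, and the helper name `list_project_files` is my own.

```python
from pathlib import Path


def list_project_files(project_dir):
    """Return sorted relative paths of every file under project_dir."""
    root = Path(project_dir)
    if not root.is_dir():
        # Nothing to list if the folder does not exist
        return []
    return sorted(
        p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file()
    )


if __name__ == "__main__":
    # "web_scraping" is the project name used in this post; change it to yours
    for rel_path in list_project_files("web_scraping"):
        print(rel_path)
```

Run it from the folder where you ran startproject so that the relative path resolves.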

Now let's work on it. Click here to learn how to scrape your first website.